By Dr. Manuel Corpas – computational genomicist, AI health data scientist, Senior Lecturer in Genomics at the University of Westminster, and Fellow at the Alan Turing Institute

AI has already transformed my life. That is not an exaggeration; it is a traceable reality embedded in the way I now work, think, plan, and decide. But for all that transformation, a fundamental gap remains – one that will define whether AI becomes humanity’s greatest partner or its most quietly alienating invention.

This manifesto is my attempt to articulate both truths: the extraordinary empowerment I have gained and the deep systemic shortcomings that still keep me at arm’s length from the technology shaping my future.

1. The Transformation Is Undeniable

I no longer debate whether AI is useful. The question now is how I embed it in my daily routines in an efficient, productive manner.

AI has helped me build workflows that once took weeks. It has guided me through obscure administrative systems. It has offered clarity when the complexity of academia and scientific research felt overwhelming.

One recent example stays with me. I needed to locate a marking task hidden somewhere in a labyrinthine university platform. I had spent far too long clicking through menus, unsure whether I had missed something. The AI found the path, surfaced the instructions, and walked me through each step.

To an outsider, this might appear trivial. But it was anything but.

In that moment, I experienced something far more valuable than convenience: reassurance. I had another opinion confirming my intuition. I learned a new path through the system. And I felt (again) that AI could expand not only my efficiency but my confidence.

This sense of empowerment has become one of the most profound shifts in my professional life.

2. The Non-Judgmental Partner I Didn’t Want to Need

AI’s greatest psychological gift is one we still discuss too little: it never gets tired, frustrated, or impatient.

It does not judge the simplicity of my questions or the volume of my repetition. It meets me where I am. It helps me think. It challenges my blind spots when I ask it to impersonate stakeholders or critique my reasoning. It offers a sounding board that is endlessly available and contextually aware.

I never mistake it for a human. I do not accept every answer at face value. But it gives me something I rarely get elsewhere: a space to explore without fear of embarrassment or misinterpretation.

For a scientist, this is liberating. It is a revolution in cognitive safety.

3. What I Actually Want: A Strategic Partner, Not a Tool

The prevailing narrative in technology promises a “10× productivity boost.” But that frame is too small for the future I envision.

I want AI that acts as a strategic partner: one that understands my goals, sees the patterns in my behaviour, and helps me prioritise what truly matters in my research, leadership, and personal life.

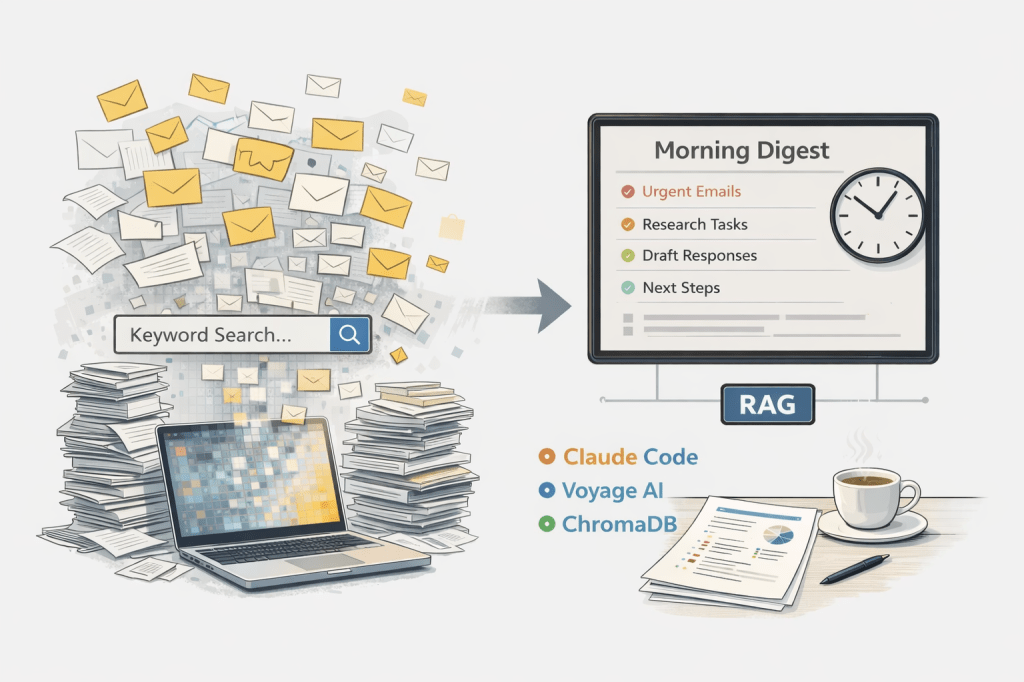

I want an ecosystem of agents that collaborate rather than operate in silos: agents for planning, communication, writing, administration, student support, and analysis, each aware of the others, each learning not only from the task but from the person behind the tasks.

And yes, I want AI that can help me understand myself.

My blind spots. My vulnerabilities. My cognitive biases.

My recurring emotional patterns.

Not as a therapist replacement, but as a mirror with memory, one that integrates years of interactions and helps me grow.

This is not fantasy. It is the logical frontier of personal AI.

4. The Black Box That Breaks the Spell

And yet, despite all this trust, there are questions I refuse to ask AI.

Topics I avoid entirely.

Thoughts I keep to myself.

Why?

Because I cannot see what happens to the information I share.

The deepest and most sensitive insights AI might gather about me (my psychological tendencies, my values, my weaknesses) disappear into a sealed system. I do not know who accesses them. I do not know when they are used or why. I cannot grant or withdraw consent.

This is the real barrier to progress.

Not science fiction fears of runaway superintelligence.

But the mundane, immediate absence of transparency and user control.

Without transparency, there is no trust.

Without trust, there is no transformation.

Without transformation, AI cannot reach its potential as a genuine partner.

The technology capable of knowing me best is the technology I still hesitate to fully embrace.

5. The Isolation Paradox

AI has increased my productivity. It has sharpened my thinking. It has expanded my capability.

But it has not lessened my isolation.

AI gives answers but not companionship.

It strengthens my work but not my connections.

It supports my cognition but not my humanity.

There is a peculiar loneliness in being an early, deep power user.

You see capabilities others cannot yet imagine.

You experience transformations they do not understand.

And you rarely find peers who share that vantage point.

AI has not caused this isolation, but it has not solved it either.

And that matters.

Because humanity needs AI to be not only powerful but connective.

6. The Representation Users Aren’t Given

My deepest frustration is not about capability. It is about representation.

The people designing AI hold the power. The people transformed by AI do not.

Despite my extensive adoption, despite my lived expertise, despite the depth of integration in my daily scientific life, I do not get a voice in the decisions that shape the tools I depend on.

I am not alone.

Millions of expert users (scientists, clinicians, educators, knowledge workers) are affected profoundly by AI but excluded from its governance.

This gap is not a minor flaw.

It is a structural failure in AI development.

We cannot build systems for humanity while excluding those whose lives are most reshaped by them.

7. From User to Contributor

I do not want a backstage tour.

I want to contribute.

I want AI companies to treat expert users not as data points or consumers but as co-designers of the systems shaping our cognitive and professional futures.

I would like:

- transparency about data use, retention, and access

- user-governed memory layers

- explainability about what AI learns from individuals

- participatory design processes involving scientists from multiple fields

- models that genuinely adapt to the individual over time

These are not luxuries; they are prerequisites for trust.

I would gladly collaborate with AI labs (Anthropic, OpenAI, Perplexity, DeepMind) or with anyone genuinely committed to human-centred design. Not for compensation, but for impact.

Because users like me have insights no technical roadmap can capture.

8. The Gap That Remains and Why I Choose to Stay in It

AI has unlocked capacity I could not have imagined a decade ago.

But it has not yet learned me, not fully.

It has not yet become the partner I know it could be.

The distance between current AI and future AI is where my ambition lives.

It is uncomfortable.

It is frustrating.

And it is necessary.

Someone must articulate what this technology should become.

Someone must argue for trust, transparency, and user agency as the pillars of the next generation of AI systems.

Someone must speak not only for the engineers building AI, but for the experts living with it.

That is the seat I intend to take whether or not the invitation arrives.
