I spend many hours working with LLMs to support my academic writing. This post summarises what I have learned. If you are using LLMs to help write academic papers, grant applications, or technical reports, these observations might save you from some painful mistakes.

You can listen to an extended version of this blog entry either in the attached audio or on my podcast.

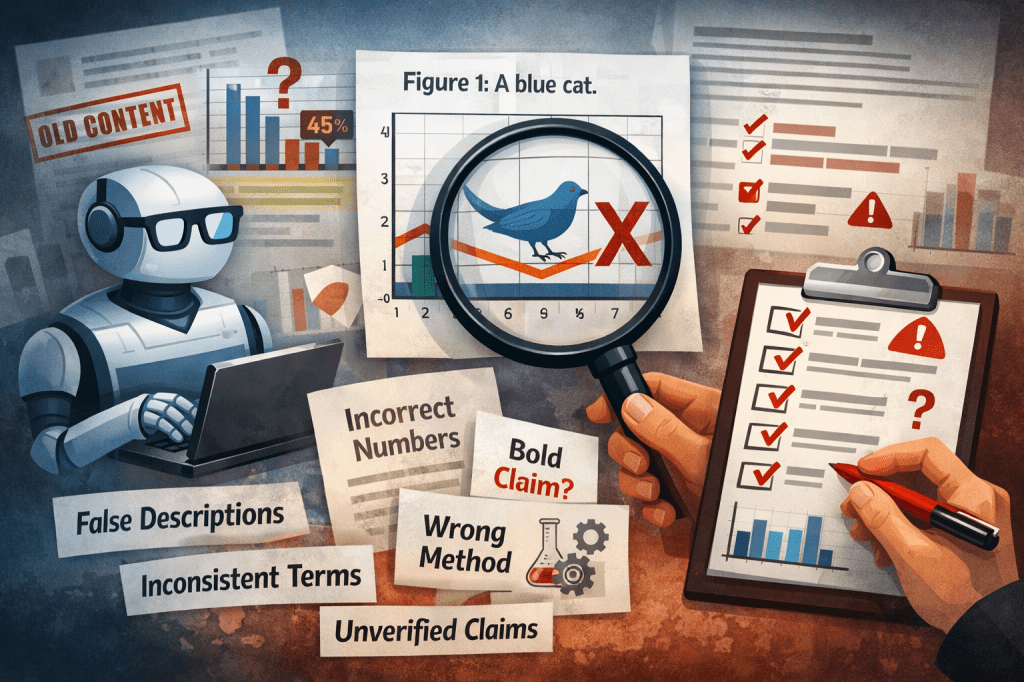

The LLM Cannot See Your Figures

This is my most frequent source of errors. The models will write confident, detailed descriptions of figures they have never seen. The problem is fundamental: LLMs generate text based on what sounds plausible in context. They have no way to verify their descriptions against your actual visual content. Every single figure caption and every sentence that references a figure needs to be checked manually against the image itself.
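To make that manual check harder to skip, it can help to list every place a figure is mentioned. The following is a minimal Python sketch, assuming the manuscript is plain text and figures are cited as "Figure N" or "Fig. N". The function name `figure_checklist` and the sample draft are hypothetical, made up for illustration.

```python
import re
from collections import defaultdict

# Matches "Figure 3" or "Fig. 3" (case-insensitive) and captures the number
FIGURE_REF = re.compile(r"\b(?:Figure|Fig\.)\s*(\d+)", flags=re.IGNORECASE)

def figure_checklist(text):
    """Map each figure number to the line numbers that mention it."""
    refs = defaultdict(list)
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in FIGURE_REF.finditer(line):
            refs[int(match.group(1))].append(lineno)
    return dict(refs)

# Hypothetical draft text, for illustration only
draft = """Figure 1 shows the study design.
Response rates rose over time (Fig. 2), as in Figure 1.
Figure 2. Response rate by week."""

checklist = figure_checklist(draft)
for number, lines in sorted(checklist.items()):
    print(f"Figure {number}: check lines {lines} against the image")
```

The script only tells you where to look. Comparing each listed sentence with the actual image is still a job for a human.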

Old Content Survives Revisions

When I ask the model to update a document, outdated content often survives somewhere in the text. LLMs process text in chunks and do not maintain a global view of document consistency. After any significant revision, you need to search the entire document for terms that should have been replaced. Do not assume the model caught everything.
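The search for terms that should have been replaced is easy to script. Here is a minimal Python sketch, assuming a plain-text manuscript and a list of stale terms that you keep yourself. The function name `find_stale_terms`, the sample text, and the terms are all hypothetical.

```python
import re

def find_stale_terms(text, stale_terms):
    """Return (line_number, term, line) for each line still containing a stale term."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for term in stale_terms:
            # Whole-word, case-insensitive match
            pattern = r"\b" + re.escape(term) + r"\b"
            if re.search(pattern, line, flags=re.IGNORECASE):
                hits.append((lineno, term, line.strip()))
    return hits

# Hypothetical manuscript text and stale terms, for illustration only
manuscript = """We recruited participants from two sites.
The control group completed the baseline survey.
Subjects in the control group were followed for six weeks."""

stale_hits = find_stale_terms(manuscript, ["subjects", "baseline survey"])
for lineno, term, line in stale_hits:
    print(f"line {lineno}: '{term}' still present -> {line}")
```

This only catches the exact terms you list. Paraphrased leftovers from an earlier draft still need a careful read.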

Numbers Sound Right But Are Not

LLMs are remarkably good at generating numbers that feel appropriate for a given context. They are not good at generating numbers that match your actual data. Every quantitative claim needs verification against your source files, your code output, or your analysis logs. This includes sample sizes, p-values, effect sizes, percentages, and any other numerical assertion.
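One way to start this check is to pull every number out of the draft and flag those you have not yet confirmed. The sketch below is a rough Python illustration, assuming you keep a set of values already checked against your analysis output. The draft sentence, the values, and the name `unverified_numbers` are invented for the example and do not come from any real study.

```python
import re

# Integers or decimals, optionally followed by a percent sign
NUMBER_PATTERN = re.compile(r"\d+(?:\.\d+)?%?")

def unverified_numbers(text, verified_values):
    """List numbers in the text that are not among the verified values."""
    found = NUMBER_PATTERN.findall(text)
    return [n for n in found if n not in verified_values]

# Hypothetical draft sentence and verified values, for illustration only
draft = "We analysed 128 participants; 42% responded (p = 0.03, d = 0.51)."
verified = {"128", "0.03", "0.51"}

flagged = unverified_numbers(draft, verified)
print("Still to verify:", flagged)
```

The comparison is on the text of each number, so "0.030" and "0.03" count as different and a value written as ".05" would be captured as "05". Treat the output as a to-do list. It cannot tell you that a number is correct.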

Claims Expand Beyond the Evidence

LLMs write persuasively. They naturally reach for broader, more impressive-sounding claims. This is useful when drafting, but dangerous when finalising. For each conclusion, ask yourself: did we actually measure this? If the answer involves any hedging, the text probably needs hedging too.

Technical Terms Drift

LLMs tend to treat related terms as interchangeable, so you need to define your terminology clearly and then verify it is used precisely throughout.
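A quick count of competing terms can show where drift has crept in. This is a minimal Python sketch, assuming you already know which variants tend to be swapped in your field. The three terms and the sample text are hypothetical, as is the function name `count_term_variants`.

```python
import re
from collections import Counter

def count_term_variants(text, variants):
    """Count case-insensitive whole-phrase occurrences of each variant."""
    counts = Counter()
    for variant in variants:
        pattern = r"\b" + re.escape(variant) + r"\b"
        counts[variant] = len(re.findall(pattern, text, flags=re.IGNORECASE))
    return counts

# Hypothetical text and term variants, for illustration only
sample = ("Response time was recorded per trial. Mean reaction time fell in "
          "block two, and latency was stable afterwards. "
          "Response time outliers were removed.")

variant_counts = count_term_variants(
    sample, ["response time", "reaction time", "latency"]
)
for term, count in variant_counts.items():
    print(f"{term}: {count}")
```

If more than one variant has a non-zero count, either the terms mean different things and each use needs checking, or they mean the same thing and one of them should go.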

Methods Described May Not Be Methods Used

LLMs often describe standard or common approaches rather than your specific implementation. If your Methods section was drafted or revised with LLM assistance, cross-reference every procedure against your actual analysis code.

What This Means for Your Workflow

None of this means LLMs are useless for academic writing. They are genuinely helpful for structuring arguments, improving prose flow, catching grammatical issues, and generating first drafts quickly. But they optimise for fluency and plausibility, not for accuracy against your specific data.

The practical implication is that LLM-assisted writing requires a verification pass that you might not need with purely human-written drafts. You need to check figures against their descriptions, search for outdated content after revisions, verify every number against source data, ensure claims match what was actually measured, confirm technical terms are used consistently, and cross-reference methods against code.

This takes time. But it takes less time than responding to reviewer comments about errors, or worse, correcting a published paper.

The Bottom Line

Think of the LLM as a skilled collaborator who writes well but has never actually seen your data, run your code, or looked at your figures. They are working from your descriptions and their general knowledge, doing their best to produce something coherent and polished.

Your job is to be the fact-checker. The LLM drafts; you verify. That division of labour, properly maintained, can be genuinely productive. But if you skip the verification, you will end up with a manuscript full of confident, well-written errors.
